STYLY AI-Powered Image Generation: Text-to-Image Embedding

22 June 2024

Introduction

AI-powered image generation has become a transformative development in technology and design. The ability to create visually striking images from simple text prompts has opened up new possibilities for creatives, interior designers, and anyone looking to bring their ideas to life. At the heart of many of these systems is CLIP (Contrastive Language-Image Pre-training), a model that links text and images and has changed how we interact with and manipulate digital images.

Understanding Text Embedding

In AI and machine learning, representing images in a form that language-based systems can understand and work with has long been a central challenge. One building block is text embedding: converting text into numerical vectors that neural networks can process, arranged so that texts with similar meanings end up close together. If images are mapped into the same vector space, images and text can be compared directly, which enables a range of powerful applications.
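
To make the idea of a text embedding concrete, here is a minimal sketch in Python. It swaps in a deliberately simple technique, a bag-of-words count vector over a hypothetical seven-word vocabulary (`VOCAB`), in place of a learned embedding, and compares vectors with cosine similarity. The names `VOCAB`, `embed_text`, and `cosine_similarity` are illustrative assumptions, not part of any real library.

```python
import math

# Hypothetical toy vocabulary. Learned embeddings use hundreds of dimensions
# and are not tied to individual words.
VOCAB = ["cat", "dog", "sofa", "lamp", "wooden", "modern", "photo"]


def embed_text(text: str) -> list[float]:
    """Toy embedding: count how often each vocabulary word occurs in the text."""
    words = text.lower().replace(",", " ").split()
    return [float(words.count(word)) for word in VOCAB]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # a vector with no known words is treated as unrelated
    return dot / (norm_a * norm_b)


query = embed_text("a modern sofa")
sim_related = cosine_similarity(query, embed_text("a modern sofa with a lamp"))
sim_unrelated = cosine_similarity(query, embed_text("a photo of a dog"))
print(f"{sim_related:.3f} {sim_unrelated:.3f}")  # prints 0.816 0.000
```

A learned model would also place related words such as "couch" and "sofa" close together; this toy version only rewards exact word overlap, which is the limitation that learned embeddings address.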

The CLIP Approach

CLIP takes this idea a step further by training a model to embed both images and text into a single shared numerical space. An image and the text that describes it end up with similar vectors, so the two modalities can be compared directly. A key ingredient in CLIP's success is the scale of its training data: the original model was trained on about 400 million image-text pairs collected from the internet.
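
The following toy sketch shows what "a shared numerical space" means in practice: image features and text features start with different sizes, and each modality gets its own projection into the same two-dimensional space, followed by L2 normalization. The projection matrices `IMAGE_PROJ` and `TEXT_PROJ` and all feature values are hypothetical, hand-picked numbers; in CLIP the encoders and projections are learned.

```python
import math


def matvec(matrix, vector):
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Hypothetical projections: 4-dim image features and 3-dim text features
# are both mapped into the same 2-dim shared space.
IMAGE_PROJ = [[1.0, 0.0, 0.5, 0.0],
              [0.0, 1.0, 0.0, 0.5]]
TEXT_PROJ = [[1.0, 0.0, 0.2],
             [0.0, 1.0, 0.2]]


def embed_image(features):
    return l2_normalize(matvec(IMAGE_PROJ, features))


def embed_text(features):
    return l2_normalize(matvec(TEXT_PROJ, features))


image_vec = embed_image([2.0, 0.1, 1.0, 0.0])  # hypothetical features of a cat photo
cat_text = embed_text([1.0, 0.0, 0.3])         # hypothetical features of "a cat"
dog_text = embed_text([0.0, 1.0, 0.3])         # hypothetical features of "a dog"

# On unit vectors, the dot product equals the cosine similarity.
score_cat = dot(image_vec, cat_text)
score_dog = dot(image_vec, dog_text)
print(round(score_cat, 3), round(score_dog, 3))
```

Because both embeddings are unit vectors of the same length, a plain dot product is enough to compare an image with any piece of text.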

Training the CLIP Model

CLIP is trained with two encoders side by side: an image encoder and a text encoder. Training is contrastive: within each batch, the embeddings of matching image-text pairs are pulled closer together in the shared space, while the embeddings of mismatched pairs are pushed apart. In this way the model learns the connections between visual and textual representations, which downstream tasks can then reuse.
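
The contrastive objective can be sketched as a symmetric cross-entropy over a batch similarity matrix, in the style of the loss used to train CLIP. This is a simplified, pure-Python illustration: the batch, the embedding values, and the fixed `temperature` of 0.07 are assumptions for the example (CLIP learns its temperature during training), and `contrastive_loss` is a hypothetical helper name.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits, target):
    """Negative log of the softmax probability of `target` (numerically stable)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss for a batch where image i is paired with text i."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity of image i and text j, divided by temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # Image -> text: row i should pick column i (its own caption).
    loss_i2t = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # Text -> image: column j should pick row j (its own image).
    loss_t2i = sum(cross_entropy([logits[i][j] for i in range(n)], j)
                   for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


# Hypothetical 2-dim embeddings for a batch of two image-text pairs.
images = [[1.0, 0.0], [0.0, 1.0]]
aligned_texts = [[0.9, 0.1], [0.1, 0.9]]   # text i describes image i
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]   # captions assigned to the wrong images

loss_aligned = contrastive_loss(images, aligned_texts)
loss_swapped = contrastive_loss(images, swapped_texts)
print(loss_aligned, loss_swapped)
```

The diagonal of `logits` holds the true pairs. The loss is low when each image scores its own caption highest and each caption scores its own image highest, which is why the mismatched batch produces a much larger value.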

Applying CLIP in Downstream Tasks

One of the most exciting applications of CLIP is its use in image generation models such as Stable Diffusion. The text prompt is encoded with CLIP's text encoder, and the generation model uses the resulting embeddings as guidance, producing images that more closely match the described concept. This gives users much finer control over the generation process and opens new possibilities for interior designers, digital artists, and beyond.
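
In Stable Diffusion v1, the prompt is encoded by CLIP's text encoder into one embedding per token, and the denoising network reads those embeddings through cross-attention. The toy sketch below shows only the core of that mechanism, scaled dot-product cross-attention, with hypothetical two-dimensional vectors. It omits the learned projection matrices, multiple attention heads, and everything else a real U-Net does, so treat it as an illustration rather than Stable Diffusion's implementation.

```python
import math


def softmax(xs):
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query (one position of the image
    being generated) returns a weighted average of the values (prompt tokens)."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]
        )
    return outputs


# Hypothetical per-token prompt embeddings standing in for text-encoder output.
# A real model first applies learned matrices to form separate keys and values.
prompt_tokens = [[4.0, 0.0], [0.0, 4.0], [0.0, 0.0]]
# Hypothetical features for two spatial positions of the image latent.
latent_positions = [[4.0, 0.0], [0.0, 4.0]]

conditioned = cross_attention(latent_positions, prompt_tokens, prompt_tokens)
print(conditioned)
```

Each image position ends up dominated by the prompt token it is most similar to, a simplified picture of how the prompt can influence different regions of the generated image.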

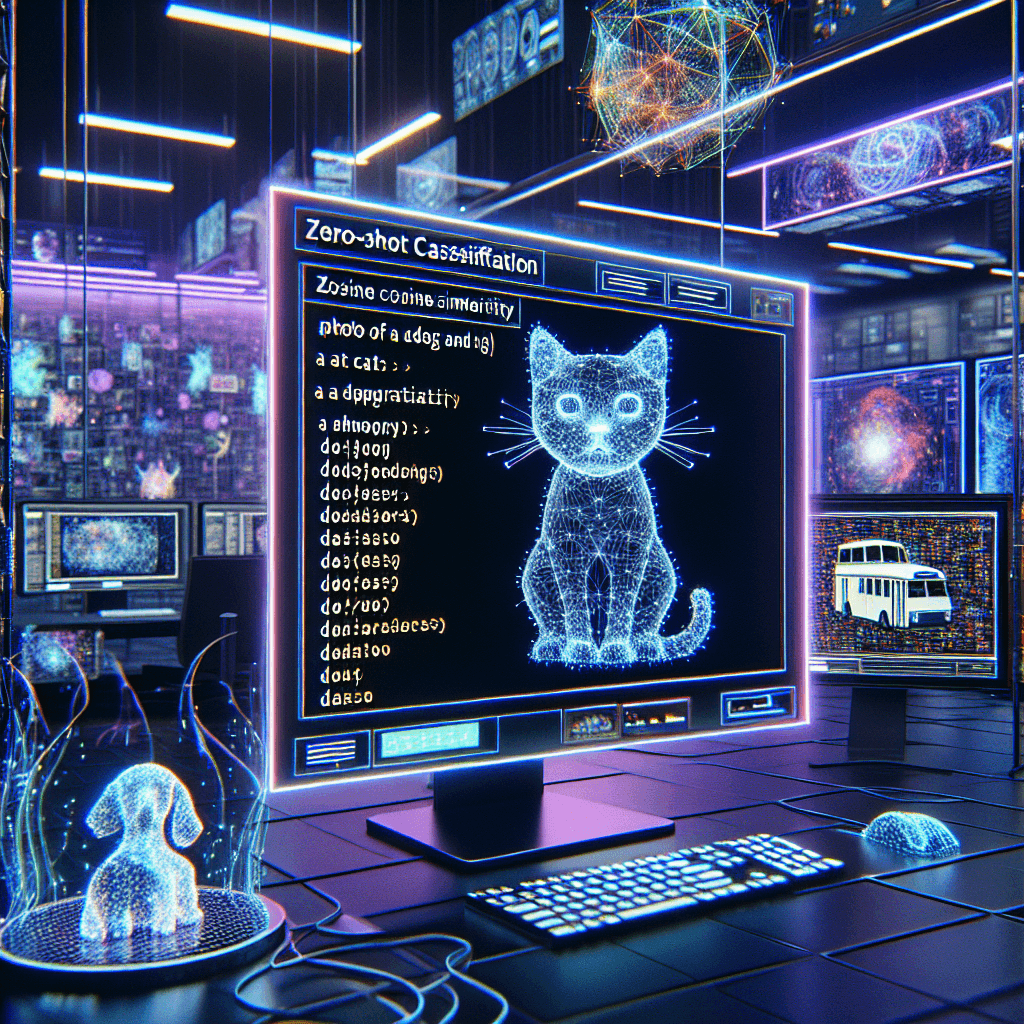

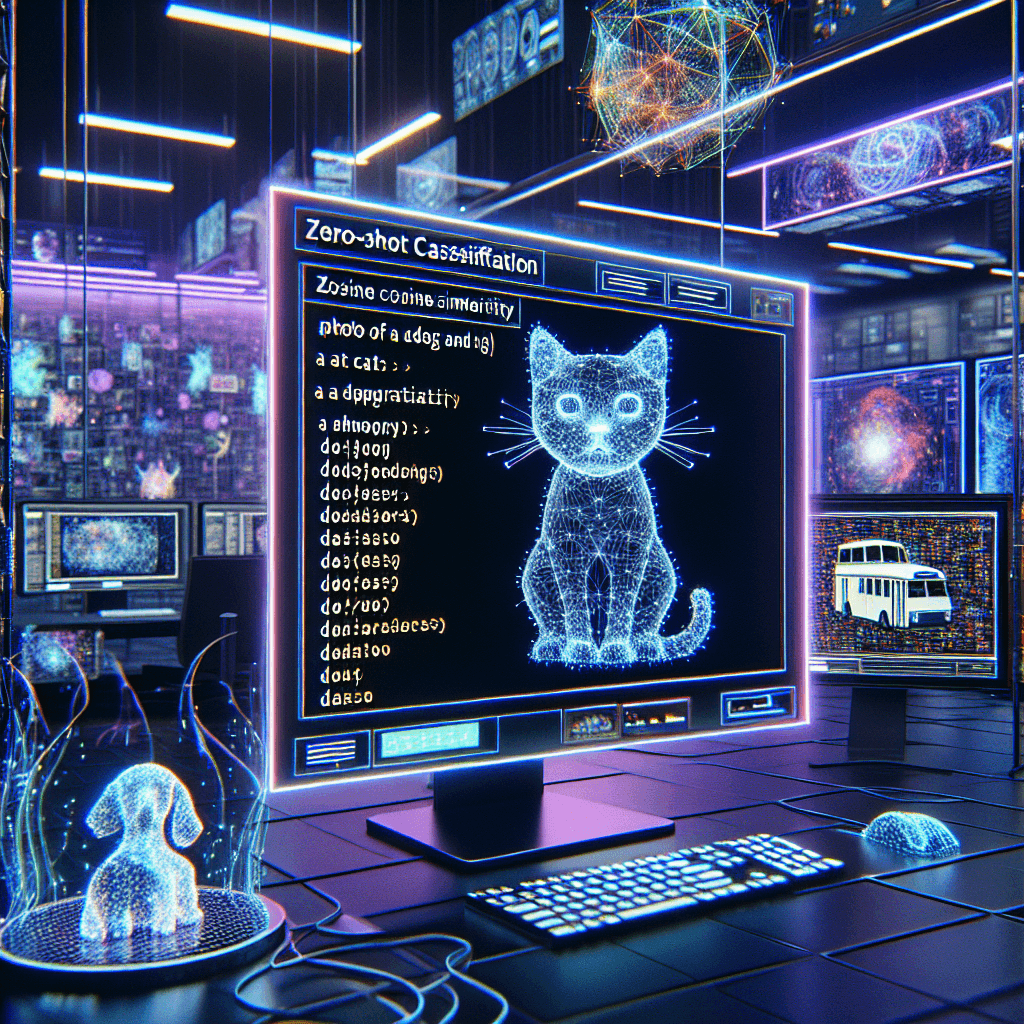

Zero-Shot Classification with CLIP

CLIP also offers zero-shot classification: the model can classify images into categories it was never explicitly trained to recognize. To do this, you embed a set of text prompts (e.g., "a photo of a cat," "a photo of a dog," "a photo of a bus"), compute the cosine similarity between the image embedding and each text embedding, and pick the prompt with the highest similarity as the most likely class.
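
The zero-shot procedure can be sketched in a few lines. The embeddings here are hypothetical three-dimensional stand-ins for what CLIP's image and text encoders would produce, `zero_shot_classify` is an illustrative helper rather than a library function, and the `scale` factor of 100 is an assumed value used to sharpen the softmax over similarities.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def zero_shot_classify(image_emb, prompt_embs, scale=100.0):
    """Return (best_prompt, probabilities) by comparing one image embedding
    with the embedding of each candidate prompt."""
    prompts = list(prompt_embs)
    sims = [cosine_similarity(image_emb, prompt_embs[p]) for p in prompts]
    # Softmax over scaled similarities turns scores into class probabilities.
    m = max(sims)
    exps = [math.exp(scale * (s - m)) for s in sims]
    total = sum(exps)
    probs = {p: e / total for p, e in zip(prompts, exps)}
    best = max(probs, key=probs.get)
    return best, probs


# Hypothetical embeddings standing in for CLIP text-encoder outputs.
prompt_embs = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.1],
    "a photo of a bus": [0.0, 0.1, 0.9],
}
image_emb = [0.8, 0.2, 0.1]  # hypothetical image-encoder output

label, probs = zero_shot_classify(image_emb, prompt_embs)
print(label)  # prints a photo of a cat
```

Adding a new class only requires writing a new prompt and embedding it; no retraining is needed, which is what makes the approach zero-shot.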

Conclusion

The integration of CLIP and other text-to-image embedding techniques has transformed digital image creation and manipulation. By bridging the gap between language and visuals, these AI-powered tools help designers, artists, and everyday users bring their ideas to life in new and exciting ways. As the technology continues to evolve, we can expect even more applications that push the boundaries of AI-powered image generation and editing.

To learn more about the latest advancements in this field, be sure to follow Styly on Facebook, Instagram, and LinkedIn.
